Using Head-Related Transfer Functions (HRTFs)

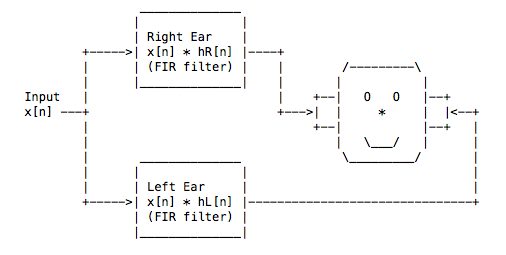

First, HRTFs are adorable:

(ASCII from the MIT KEMAR FAQ, circa 1997, on how to apply HRTF impulse responses.)

My time spent playing around with Csound was delightful and a little sad. There’s so much more I could learn, and a lot of it is fundamental to really understanding signal processing, digital instrumentation, and computer music. Still, I learned a lot about actually using HRTFs, which were one of the things I toyed with while building a little game called sound_fall. They didn’t work out for that project, but I wanted to dig around a little more and share some of what I found, since practical discussion of HRTFs is hard to come by.

If you’re interested in Csound, the online manual is very useful, if a bit dense. The examples included on the manual pages for the HRTF opcodes were more immediately enlightening, at least for getting something working quickly. The relevant opcodes here are hrtfer, hrtfmove, hrtfmove2, and hrtfstat.

To get a feel for this, I’ve made a simple .csd file, which is the file that holds the instrument and score information for Csound. It plays a fractal phased sine wave that I made in Audacity.

<CsoundSynthesizer>

<CsOptions>

; non-realtime output

-o hrtf.wav

</CsOptions>

<CsInstruments>

sr = 44100 ; standard sample rates

kr = 4410

ksmps = 10

nchnls = 2 ; final output is stereo

instr 10

kaz line 0, p3, 180 ; move azimuth from front around right to back

kelev line 50, p3, -30 ; move elevation in arc from 50 to -30 deg

; (above you to below you)

ain soundin "fast_tone.wav" ; input sound

; set left and right channels to results of the hrtfmove2 opcode

; this has less clicking, especially when used for real-time synthesis

; this processes ain, with azimuth 0, the predefined elevation arc,

; and the two HRTF data files (that contain the impulse responses)

;aleft,aright hrtfmove2 ain, 0, kelev, "hrtf-44100-left.dat","hrtf-44100-right.dat"

; This does the same, but with the predefined azimuth arc and an

; elevation of zero.

aleft,aright hrtfmove2 ain, kaz, 0, "hrtf-44100-left.dat","hrtf-44100-right.dat"

outs aleft, aright

endin

</CsInstruments>

<CsScore>

i10 0 20 ; play for 20 seconds

e

</CsScore>

</CsoundSynthesizer>

I tried to comment the file enough to make it readable, even if you don’t really know what’s going on. To use it, you’ll need to have Csound installed, and grab this pack I made with the .csd, fast_tone.wav, and the HRTF data files.

Then, from a terminal, invoke csound:

$ csound simple_hrtf.csd

This will output a file named hrtf.wav. Try listening to it with decent headphones.

You may notice that you need to pay a bit of attention to where the sound is coming from to really get the effect. The sound I used has enough edge and variation that its motion in space is easier to hear than a steadier tone’s would be under the same transformations. You can pass the hrtfmove2 opcode any mono input, so experimentation is easy.
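
If you don’t have a mono sound handy, here is a rough sketch of one way to generate one with Python’s standard library. It is only an illustration: the function names, the wobble parameters, and the output name test_tone.wav are all made up for this example, and the result is just a sine wave with some vibrato and tremolo, not the sound used above.

```python
import math
import struct
import wave


def make_tone(duration_s, sample_rate=44100, base_hz=440.0, wobble_hz=6.0):
    """Return 16-bit samples of a sine whose pitch and loudness wobble."""
    samples = []
    for n in range(round(duration_s * sample_rate)):
        t = n / sample_rate
        # Vibrato: a slow sine added to the phase of the main tone.
        phase = 2 * math.pi * base_hz * t + 3.0 * math.sin(2 * math.pi * wobble_hz * t)
        # Tremolo: amplitude drifts between 0.3 and 0.9 of full scale.
        amp = 0.6 + 0.3 * math.sin(math.pi * wobble_hz * t)
        samples.append(int(32767 * amp * math.sin(phase)))
    return samples


def write_mono_wav(path, samples, sample_rate=44100):
    """Write the samples as a mono, 16-bit PCM WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)   # mono, which is what hrtfmove2 expects as input
        wav.setsampwidth(2)   # 2 bytes per sample = 16-bit
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))


if __name__ == "__main__":
    # Hypothetical file name; 20 seconds matches the score in the .csd.
    write_mono_wav("test_tone.wav", make_tone(20.0))
```

To try it, point the soundin line in the .csd at the new file instead of fast_tone.wav. The 44100 Hz rate is chosen to match the sr setting and the hrtf-44100 data files.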

The actual math for using the HRTF data files involves FFTs and discrete-time convolutions. Since I skipped out of being an electrical engineer, I’ve avoided needing to learn about things like that, but I can’t help being curious. If I were a little more competent, I might try to build an extension for OpenAL (which is woefully neglected these days, from the looks of it) to add this functionality. That would make it a little easier to integrate into applications that aren’t oriented toward music synthesis. Oh well.
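
For the curious, the time-domain half of that math is small enough to sketch. The idea is that each ear gets the mono signal convolved with that ear’s impulse response for the source direction. This is a minimal illustration of that idea only, not what Csound does internally: the function names are made up, and the impulse responses are toy values invented for the example rather than measured data.

```python
def convolve(signal, impulse_response):
    """Direct discrete-time convolution: y[n] = sum over k of x[k] * h[n - k]."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            # Each input sample adds a scaled, shifted copy of h to the output.
            out[n + k] += x * h
    return out


def binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left/right impulse-response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy impulse responses (invented, not measured): a source off to the right
# reaches the right ear first and louder, and the left ear later and quieter.
hrir_right = [1.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.5]   # two samples later, half the amplitude

mono = [1.0, 2.0, 3.0]
left, right = binaural(mono, hrir_left, hrir_right)
print(left)   # [0.0, 0.0, 0.5, 1.0, 1.5]
print(right)  # [1.0, 2.0, 3.0, 0.0, 0.0]
```

The FFT enters as a speed-up: convolution in the time domain is multiplication in the frequency domain, so long signals and long impulse responses are usually processed that way rather than with the nested loop above. A moving source adds the extra problem of changing impulse responses over time, which this sketch ignores entirely.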

Moving on from here, I’d really like to find a good system for real-time synthesis. Csound seems like a possibility, but it isn’t really designed for it from the ground up; the HRTF example here is written to a WAV file to avoid buffering and clicking issues.