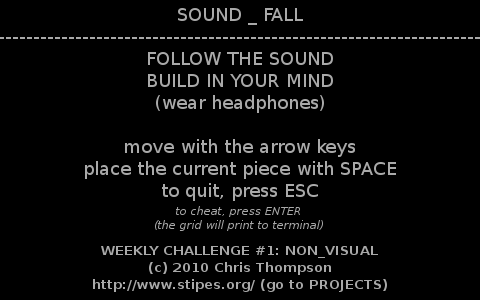

SOUND _ FALL : build with sound

A friend and I had the idea of building games (defined loosely) that didn’t use sight as the primary medium. On a standard PC, this generally limits you to only sound.

This project is available on GitHub.

Place blocks of sounds without using your eyes.

Download, start it, and then close your eyes. See what you can build using only your ears.

This game/vignette was created using Python (tested in version 2.6) and PyGame.

This was a surprisingly long haul for only four days of on-and-off work. I’ve learned more about 3D positional audio than I think I’ll want to know for a long time.

Some of the good things I’ve learned:

- PyGame is actually well-built and stable. They know how to handle sample buffers.

- Pyglet is definitely a possibility for future endeavors. It’s a separate library from PyGame, built on OpenGL, with better 3D support.

- Python is, as always for me, a great prototyping language.

- HRTFs are awesome.

But some things I had to learn the hard way:

- OpenAL is a behemoth that I wish worked better. The fact that all of the Python bindings are terribly old and poorly documented doesn’t help.

- Keep things as simple as possible, even if the overall idea is really complicated. OpenAL was way more than this project needed. I ended up building a custom panning system (since PyGame’s mixer only supports Left/Right channel volumes) that was far simpler, and it did everything I needed it to.

- Humans don’t have high enough resolution for audiospatial recognition: we really distribute sounds within eight regions of the sphere (from my experience). The rest of what we think we might be sensing is due to other cues.
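To make the custom panning idea concrete, here is a minimal sketch of how a left/right panner can be built on nothing but two channel volumes. It is not the code from the project: the function name `pan_volumes`, the `max_distance` parameter, the linear distance falloff, and the constant-power pan law are all assumptions chosen for illustration.

```python
import math


def pan_volumes(listener_xy, source_xy, max_distance=10.0):
    """Return (left, right) volumes in [0, 1] for a sound source.

    Hypothetical helper: assumes a 2D world with the listener facing +y,
    so +x is the listener's right-hand side.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    distance = math.hypot(dx, dy)

    # Assumed falloff: volume drops linearly to silence at max_distance.
    gain = max(0.0, 1.0 - distance / max_distance)

    # Pan position in [-1, 1]: -1 is hard left, +1 is hard right.
    pan = 0.0 if distance == 0 else dx / distance

    # Constant-power pan law: map pan onto a quarter circle so that
    # left**2 + right**2 stays equal to gain**2 wherever the source is.
    angle = (pan + 1.0) * math.pi / 4.0
    return gain * math.cos(angle), gain * math.sin(angle)


# A block five units to the listener's right: almost all right channel.
left, right = pan_volumes((0, 0), (5, 0))
# With PyGame, the pair would be handed to a mixer channel, e.g.
# channel.set_volume(left, right)
```

Note that a source directly ahead and one directly behind produce the same pair of volumes here. Plain left/right panning can't tell front from back, so that information has to come from some other cue.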

The fact that humans can’t pinpoint sounds in 3D space solely based on the sound was my primary sticking point.

I had originally wanted to implement this using only 3D positional audio with HRTFs (Head-Related Transfer Functions). I thought this would let me cue the position of blocks around the player, but it just didn’t cut it. That doesn’t stop HRTFs from being really awesome, though.

If you aren’t familiar with HRTFs and care about audio production in any capacity, you should check them out. Head-Related Transfer Functions (HRTFs) are filters, one per ear, that you can convolve a mono input against to get the signals a human would actually hear in each ear. MIT published a database of them back in the ’90s, measured on a KEMAR dummy head with special microphones. I’m still not comfortable trying to use them by hand, but Csound has HRTF support for static and moving positional sounds, which is really cool. I wanted to try hacking this system together by driving Csound’s command-line interface from Python, but HRTFs just weren’t enough. Darn.
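For a feel of what "convolving against an HRTF" means, here is a small pure-Python sketch. The two impulse responses are made-up toy values, not measurements from any real database, and the names `convolve` and `binaural` are hypothetical. Real HRTF impulse responses are much longer, and you would normally use an FFT-based convolution instead of this direct loop.

```python
def convolve(signal, impulse_response):
    """Direct (slow) convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal through a per-ear impulse response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy impulse responses (assumed, NOT real data) for a source on the right:
# the right ear hears it immediately at full level, the left ear hears it
# two samples later at half level.
hrir_right = [1.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.5]

mono = [1.0, 0.5]
left, right = binaural(mono, hrir_left, hrir_right)
# left  -> [0.0, 0.0, 0.5, 0.25]
# right -> [1.0, 0.5, 0.0, 0.0]
```

Even this toy version shows the two main cues an HRTF encodes: a time difference and a level difference between the ears. A measured HRTF adds the frequency shaping from the head and outer ear on top of that.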